At many research funders around the world, the decision-making process lacks transparency: applications go into a “black box”, and award decisions come out. The Russian Science Foundation (RSF) has taken the lead toward greater transparency in its review process, discussing in detail with a diverse research community the AI-assisted toolkit for finding reviewers that it tested this year.

The new system was presented by Andrei Blinov, RSF Deputy General Director, Head of the Program and Projects. To begin with, each application must be assigned to two to five reviewers who are highly qualified in the project's topic and have no conflict of interest. The decision on who receives the application for evaluation is made by the panel chair of the expert council. Very often the panel chairs know these evaluators, and sometimes the applicants too, if not in person, then through their work and publications.

On the one hand, this is extremely helpful: for example, a panel chair can immediately tell which evaluator really understands the subject of an application, regardless of whether the relevant keywords appear in the reviewer's profile. On the other hand, it introduces a subjective factor. For example, a panel chair who personally disagrees with an applicant's hypothesis might assign the proposal to so-called “negative”, highly critical reviewers, while an application the chair wants to see funded might go to more lenient, “positive” reviewers. At the same time, panel chairs' duties are so demanding that carefully checking every reviewer assignment, including each reviewer's publication list to confirm that the topic suits them, is no easy task.

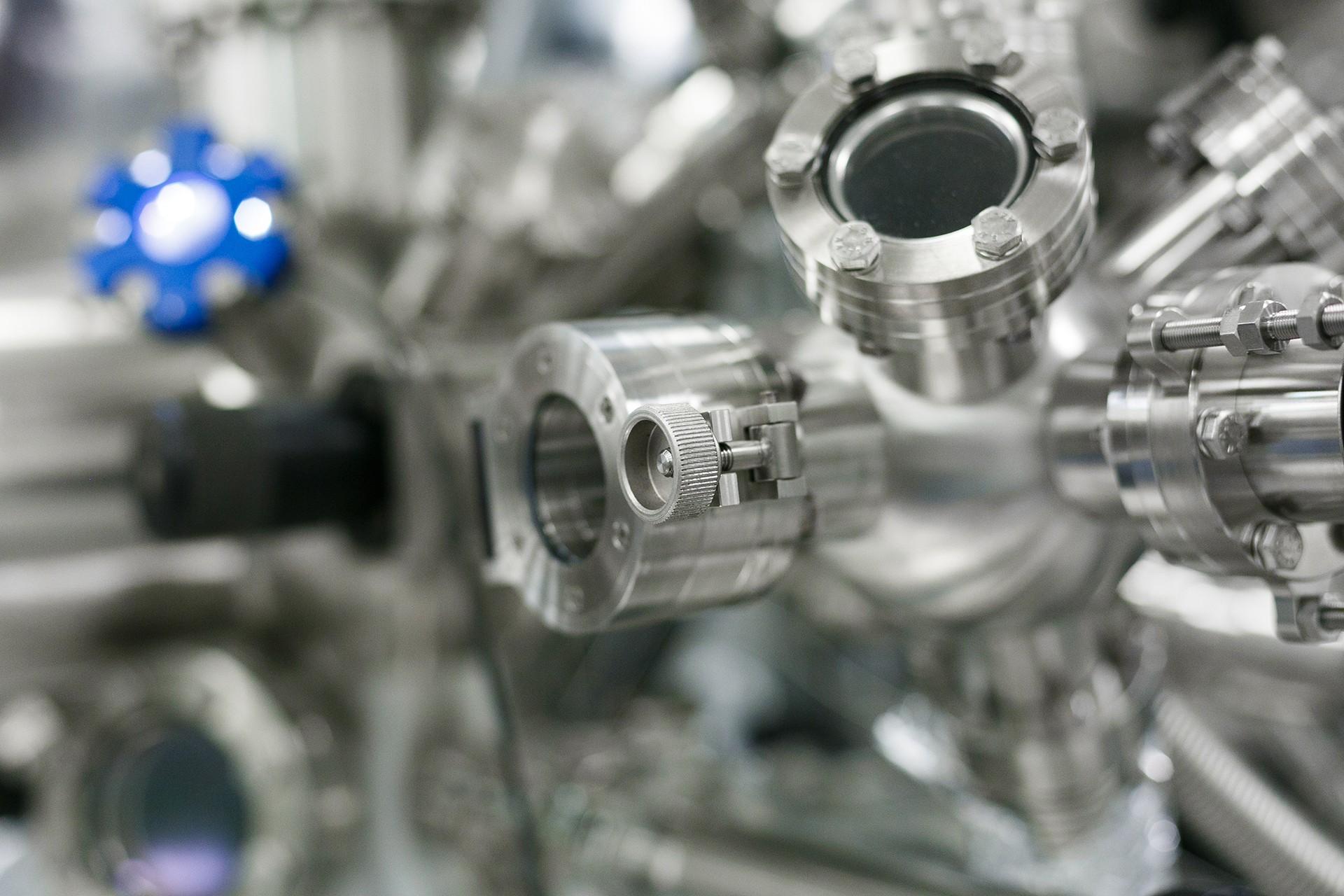

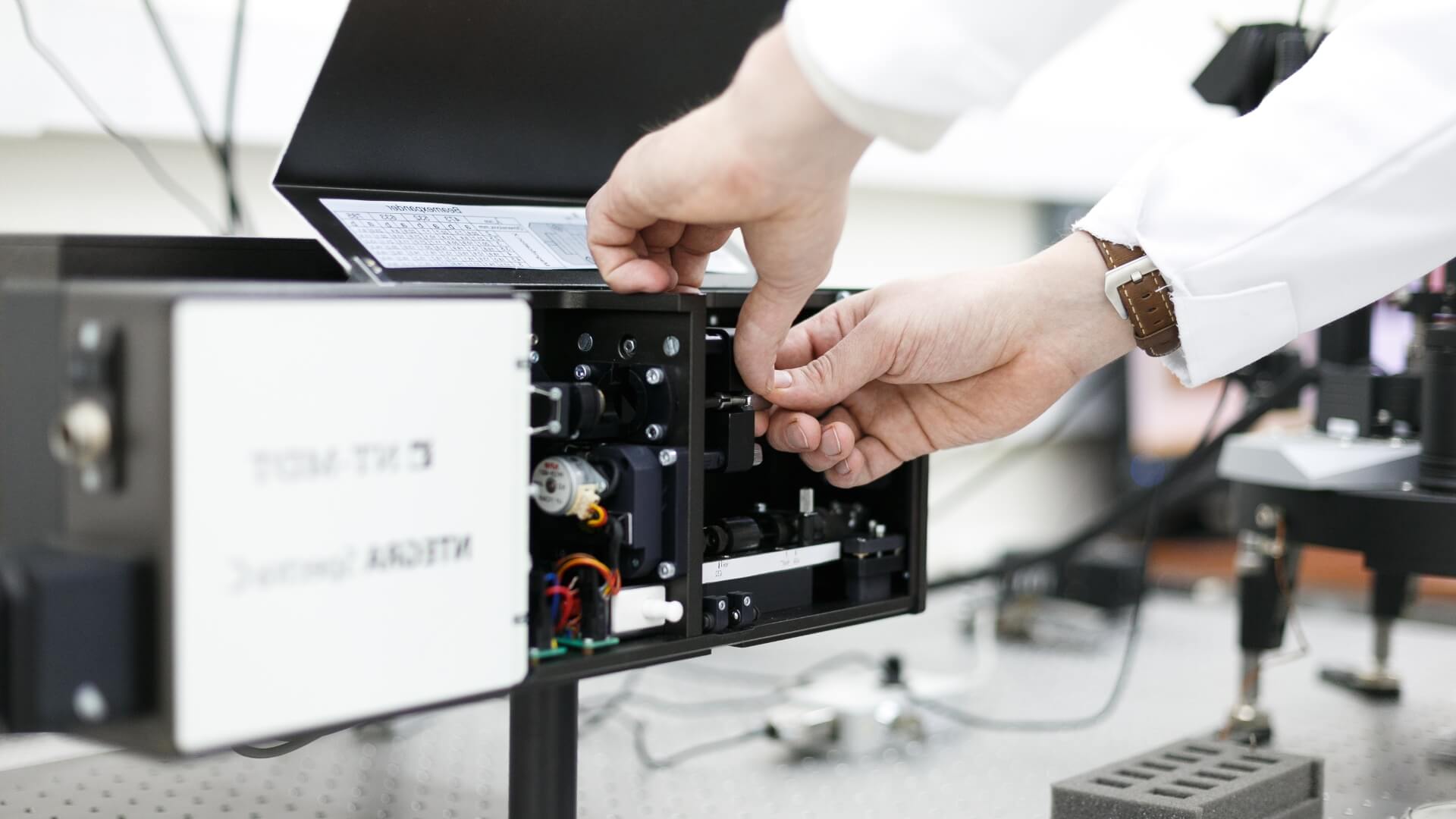

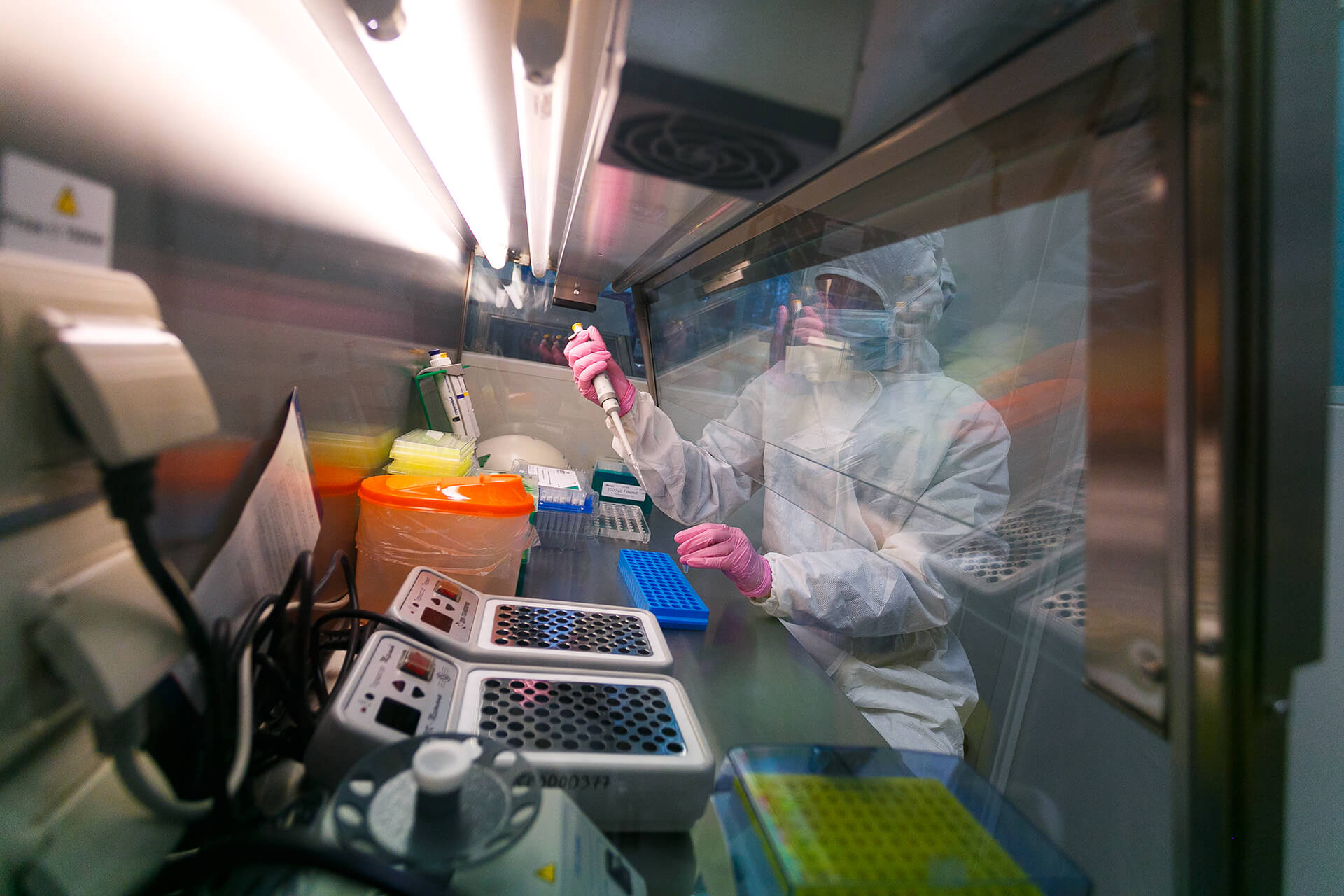

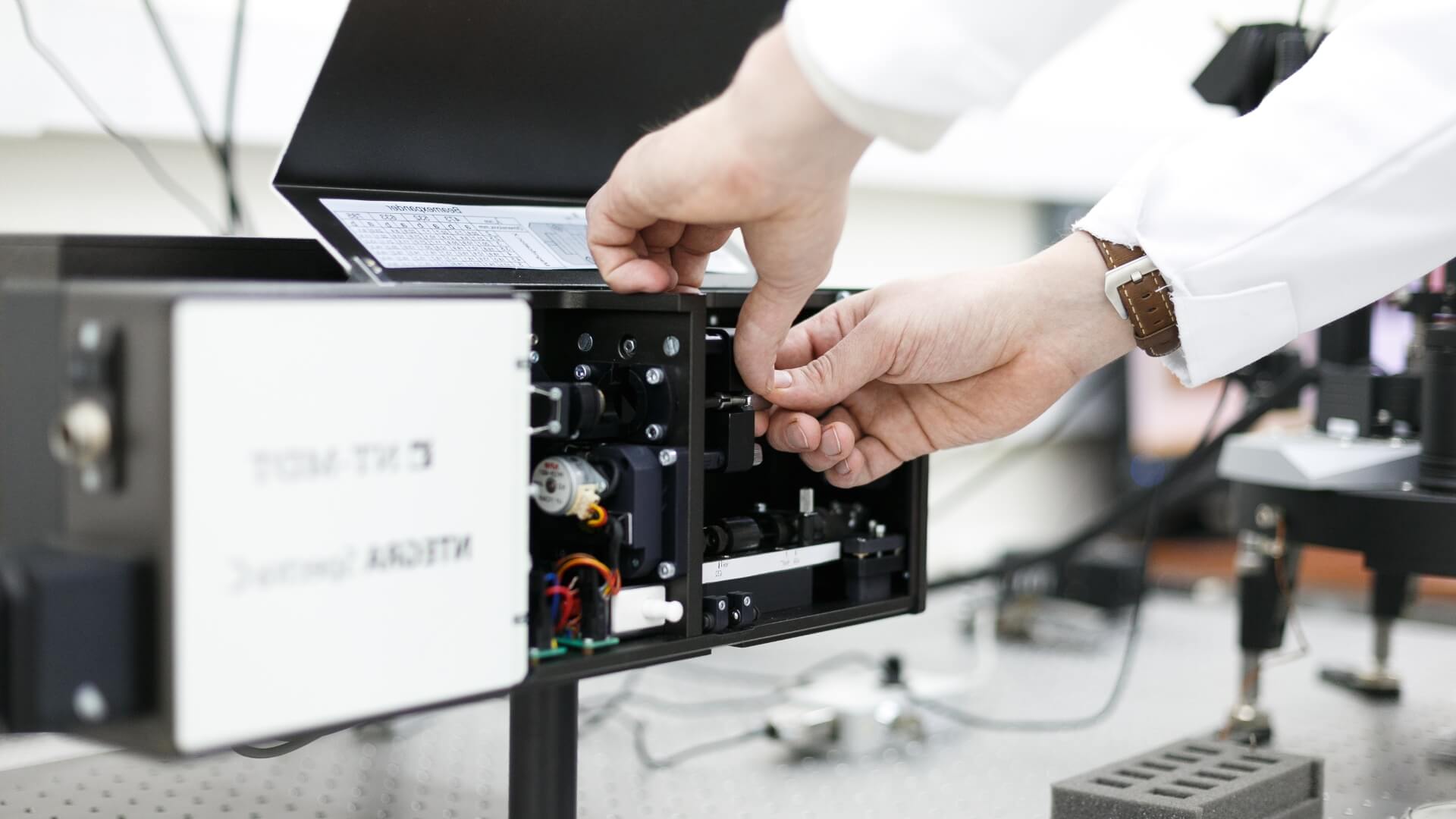

Source: RSF Press Office

“Over the six years since the Russian Science Foundation was founded, the panel chairs have assigned about 250,000 reviews. That works out to an average of 12 reviews per working day for each panel chair: an enormous workload! And about 40% of these review invitations are wasted, because reviewers decline for various reasons, such as being busy or ill. As a result, that part of the panel chair's work is simply lost,” Blinov complained. “Furthermore, when several thousand reviewers are being invited, human errors inevitably creep in. Finally, these hard-working people are also accused by researchers of assigning the desired reviewers to the ‘necessary’ applications.”

This year, the RSF decided to automate this step to save panel chairs' time and to reduce potential subjective bias in the evaluation of applications. The reviewer-selection procedure had been built up step by step, with more parameters automated over time. In 2014, the first year of RSF operations, the internal analytical system used only classifier codes, for example 01-105 (topology), to select the pool of reviewers proposed to the panel chair for a given application. In 2015, the system began to account for some conflicts of interest, for example by not proposing reviewers from the organization where the applicant is employed. Later, a restriction on reviewers with competing applications was added so that they could not decide the fate of applications competing with their own.

In 2016, the system began to take each reviewer's current review load into account. An empirically chosen cap was set: no more than 10 applications under review at the same time per reviewer. This helps prevent reviewers from evaluating applications hastily and carelessly, which supports the quality of assessment. Admittedly, some reviewers spend a long time producing a poor evaluation of a single application, while others assess dozens quickly and well. Today, in most cases, a reviewer evaluates up to five projects at once, and as soon as one assessment is completed, another application can be assigned. More recently, reviewers have also been able to set their own maximum number of simultaneous applications: if a reviewer decides they can handle no more than five at once, the system will not invite them to evaluate a sixth.
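As a rough illustration, the load rule comes down to comparing a reviewer's active reviews with the stricter of the global cap (10) and the reviewer's own limit, if one is set. The sketch below assumes a simple in-memory record; the names `Reviewer`, `effective_limit` and `can_invite` are hypothetical and not part of any RSF system.

```python
from dataclasses import dataclass
from typing import Optional

GLOBAL_MAX_LOAD = 10  # empirically chosen cap on simultaneous reviews


@dataclass
class Reviewer:
    name: str
    active_reviews: int                 # applications currently under review
    personal_max: Optional[int] = None  # reviewer-chosen limit, if any (hypothetical field)


def effective_limit(reviewer: Reviewer) -> int:
    """Return the stricter of the global cap and the reviewer's own limit."""
    if reviewer.personal_max is None:
        return GLOBAL_MAX_LOAD
    return min(GLOBAL_MAX_LOAD, reviewer.personal_max)


def can_invite(reviewer: Reviewer) -> bool:
    """A reviewer may be invited only while they have a free review slot."""
    return reviewer.active_reviews < effective_limit(reviewer)
```

For example, a reviewer who set a personal limit of five and has five applications in progress is skipped; once one review is submitted and `active_reviews` drops to four, they become eligible again. A personal limit above 10 has no effect, because the global cap still applies.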

Automatic reviewer assignment works in two stages. First, the system selects 50 to 100 potential referees for the project, eliminating unsuitable candidates by checking their review load against the limit (up to 10 applications), their affiliation with the applicant's host organization, their match with the project's classifier codes and keywords, and any competing applications. Second, the algorithm ranks the remaining candidates by how closely their research interests match the project's topic and by their current review load, and invites the top-ranked reviewers. All numerical parameters of the selection algorithm can be tuned, for example to adapt to a very narrow or, conversely, a broad research field.
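The two stages can be sketched in Python as below. This is a minimal illustration, not the RSF's actual algorithm: the data model, the Jaccard keyword-overlap measure, the `load_weight` penalty and all function names are assumptions made for the example. The description above only states that candidates are filtered on load, affiliation, classifier codes and keywords, and competing applications, and then ranked by topic match and load.

```python
from dataclasses import dataclass, field


@dataclass
class Reviewer:
    name: str
    organization: str
    codes: set[str]        # classifier codes, e.g. "01-105" (topology)
    keywords: set[str]
    active_reviews: int = 0
    max_load: int = 10     # cap on simultaneous reviews
    competitions_applied: set[str] = field(default_factory=set)


@dataclass
class Application:
    host_organization: str
    code: str
    keywords: set[str]
    competition: str


def is_eligible(r: Reviewer, app: Application) -> bool:
    """Stage 1: hard filters on load, affiliation, codes/keywords, competing bids."""
    return (
        r.active_reviews < r.max_load                       # has a free review slot
        and r.organization != app.host_organization         # no shared affiliation
        and app.code in r.codes                             # classifier code matches
        and len(r.keywords & app.keywords) > 0              # at least one shared keyword
        and app.competition not in r.competitions_applied   # no competing application
    )


def match_score(r: Reviewer, app: Application, load_weight: float = 0.5) -> float:
    """Stage 2: keyword overlap (Jaccard index) minus a penalty for current load."""
    union = r.keywords | app.keywords
    overlap = len(r.keywords & app.keywords) / len(union) if union else 0.0
    return overlap - load_weight * r.active_reviews / r.max_load


def select_reviewers(pool, app, n_invite=3, max_candidates=100, load_weight=0.5):
    """Return (reviewers to invite now, ranked reserves for replacements)."""
    eligible = [r for r in pool if is_eligible(r, app)]
    ranked = sorted(eligible, key=lambda r: match_score(r, app, load_weight), reverse=True)
    shortlist = ranked[:max_candidates]
    return shortlist[:n_invite], shortlist[n_invite:]
```

Here `n_invite`, `max_candidates` and `load_weight` stand in for the tunable numerical parameters mentioned above; a very narrow field might, for instance, call for a smaller shortlist or a lighter load penalty.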

Compared with the traditional approach, this dramatically speeds up the process of inviting reviewers. Reviewers receive invitations sooner and therefore accept or decline sooner, and because the system immediately invites a replacement whenever a reviewer declines, the review process as a whole moves faster.
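The replacement loop can be sketched as follows, assuming a ranked list of candidates from the selection stage and, for simplicity, that all responses to one round of invitations arrive together. In practice responses arrive one by one; the function `assign_with_replacements` and the simulated `declines` set are hypothetical.

```python
from collections import deque


def assign_with_replacements(ranked, n_needed, declines):
    """Fill n_needed review slots from a ranked candidate list.

    ranked:   candidate IDs, best first (output of the ranking stage)
    declines: IDs of candidates who will decline (simulated responses)
    Returns (accepted candidate IDs, number of invitation rounds used).
    """
    reserves = deque(ranked)
    accepted = []
    rounds = 0
    while len(accepted) < n_needed and reserves:
        rounds += 1
        # Invite as many reserves as there are open slots, all at once.
        open_slots = min(n_needed - len(accepted), len(reserves))
        batch = [reserves.popleft() for _ in range(open_slots)]
        # Anyone who declines leaves a slot open for the next round.
        accepted.extend(c for c in batch if c not in declines)
    return accepted, rounds
```

With candidates `["A", "B", "C", "D", "E"]`, three slots and `"B"` declining, the first round invites A, B and C, and the second round invites only D to replace B. If the list runs out before all slots are filled, the function returns the partial result, which is the point at which a panel chair would have to step in manually.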


When manual and automated reviewer assignment are compared, the evaluation scores turn out to be similar, which suggests that the change did not affect review quality. However, the polarity of evaluations has decreased somewhat: in 16% of cases, all three reviewers gave the application exactly the same assessment. Of course, three reviewers are selected for each project precisely to capture diverse opinions, but if assessments are too polarized, this may indicate either differing levels of reviewer qualification or insufficient methodological guidance for reviewers. Therefore, every case of polarized assessments is examined in depth by the expert council panels.

With AI assistance, the number of declined review invitations has increased slightly. However, the reasons for declining show that declines due to conflicts of interest and inconvenient deadlines have, on the contrary, decreased. The computer does not know reviewers' competence as well as a panel chair does, but it is much more efficient at thoroughly checking affiliations.

At present, automatic reviewer assignment is not compulsory: any panel chair can continue to invite reviewers manually. Still, judging by the evidence, the first results of the AI deployment are promising: the share of objections to reviews fell from 0.32% to 0.28%.

However, some members of the research community expressed concerns about the use of AI assistance in review. Academician Vadim Kukushkin warned against moving to fully automated reviewer assignment, because if the system failed or were attacked, review results could be seriously distorted. In addition, the information in reviewer profiles, such as classifier codes, research interests, and keywords, must be kept up to date and accurate. The issue of “negative” and “positive” reviewers has also not yet been fully resolved. The RSF plans to develop a system for estimating its reviewers' likely evaluation tendencies, so that each application is assessed by a balanced pool of “negative” and “positive” reviewers and the assessment becomes even more objective.

The most important, and still unresolved, problem today is the size of the reviewer pool, which consists of more than 5,000 Russian and more than 1,500 international reviewers. Not all of them are active or have outstanding scientific achievements. The RSF regularly invites the leaders of funded projects to become reviewers, but this is still not sufficient.

Nevertheless, according to Alexander Klimenko, Academician of the Russian Academy of Sciences and chairman of the expert council for research projects, the automated reviewer assignment system has achieved its main goal: “In 2017, everyone complained that, while we were discussing objectivity and transparency in review, a few specific people on the RSF expert council (the panel chairs) had superpowers over the evaluation process. They could influence the assessment of any application and help it jump to the ‘king's row’. Now we will no longer face such risks,” he concluded.